fma_test1

(FPCore (t) :precision binary64 (let* ((t_1 (+ 1.0 (* t 2e-16)))) (+ (* t_1 t_1) (- -1.0 (* 2.0 (* t 2e-16))))))

double code(double t) {

double t_1 = 1.0 + (t * 2e-16);

return (t_1 * t_1) + (-1.0 - (2.0 * (t * 2e-16)));

}

real(8) function code(t)

real(8), intent (in) :: t

real(8) :: t_1

t_1 = 1.0d0 + (t * 2d-16)

code = (t_1 * t_1) + ((-1.0d0) - (2.0d0 * (t * 2d-16)))

end function

public static double code(double t) {

double t_1 = 1.0 + (t * 2e-16);

return (t_1 * t_1) + (-1.0 - (2.0 * (t * 2e-16)));

}

def code(t):
    t_1 = 1.0 + (t * 2e-16)
    return (t_1 * t_1) + (-1.0 - (2.0 * (t * 2e-16)))

function code(t)
    t_1 = Float64(1.0 + Float64(t * 2e-16))
    return Float64(Float64(t_1 * t_1) + Float64(-1.0 - Float64(2.0 * Float64(t * 2e-16))))
end

function tmp = code(t)
    t_1 = 1.0 + (t * 2e-16);
    tmp = (t_1 * t_1) + (-1.0 - (2.0 * (t * 2e-16)));
end

code[t_] := Block[{t$95$1 = N[(1.0 + N[(t * 2e-16), $MachinePrecision]), $MachinePrecision]}, N[(N[(t$95$1 * t$95$1), $MachinePrecision] + N[(-1.0 - N[(2.0 * N[(t * 2e-16), $MachinePrecision]), $MachinePrecision]), $MachinePrecision]), $MachinePrecision]]

\begin{array}{l}
\\
\begin{array}{l}
t_1 := 1 + t \cdot 2 \cdot 10^{-16}\\
t_1 \cdot t_1 + \left(-1 - 2 \cdot \left(t \cdot 2 \cdot 10^{-16}\right)\right)
\end{array}
\end{array}

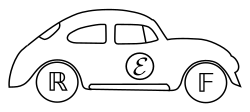

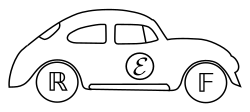

Sampling outcomes in binary64 precision:

Herbie found 2 alternatives:

| Alternative | Accuracy | Speedup |
|---|---|---|


(FPCore (t) :precision binary64 (* (* (* t 2e-16) t) 2e-16))

double code(double t) {

return ((t * 2e-16) * t) * 2e-16;

}

real(8) function code(t)

real(8), intent (in) :: t

code = ((t * 2d-16) * t) * 2d-16

end function

public static double code(double t) {

return ((t * 2e-16) * t) * 2e-16;

}

def code(t):
    return ((t * 2e-16) * t) * 2e-16

function code(t)
    return Float64(Float64(Float64(t * 2e-16) * t) * 2e-16)
end

function tmp = code(t)
    tmp = ((t * 2e-16) * t) * 2e-16;
end

code[t_] := N[(N[(N[(t * 2e-16), $MachinePrecision] * t), $MachinePrecision] * 2e-16), $MachinePrecision]

\begin{array}{l}

\\

\left(\left(t \cdot 2 \cdot 10^{-16}\right) \cdot t\right) \cdot 2 \cdot 10^{-16}

\end{array}

Initial program: 3.4%

Taylor expanded in t around 0

*-commutative: N/A

lower-*.f64: N/A

unpow2: N/A

lower-*.f64: 99.3%

Applied rewrites: 99.3%

Applied rewrites: 99.5%

Final simplification: 99.5%
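The Taylor expansion step can be checked by hand, because the initial program is a polynomial in t and the expansion around 0 is therefore exact. The worked equation below is a sketch of that algebra; the symbol \varepsilon is introduced here only as shorthand for the constant 2 \cdot 10^{-16} and does not appear in the report itself.

```latex
\begin{array}{l}
\varepsilon := 2 \cdot 10^{-16}\\
\left(1 + \varepsilon t\right)^2 + \left(-1 - 2 \varepsilon t\right) = 1 + 2 \varepsilon t + \varepsilon^2 t^2 - 1 - 2 \varepsilon t = \varepsilon^2 t^2 = 4 \cdot 10^{-32} \cdot t^2
\end{array}
```

The terms 1 and 2 \varepsilon t cancel exactly in real arithmetic, which is why the rewritten programs contain only products of t with the constant.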

(FPCore (t) :precision binary64 (* (* 4e-32 t) t))

double code(double t) {

return (4e-32 * t) * t;

}

real(8) function code(t)

real(8), intent (in) :: t

code = (4d-32 * t) * t

end function

public static double code(double t) {

return (4e-32 * t) * t;

}

def code(t):
    return (4e-32 * t) * t

function code(t)
    return Float64(Float64(4e-32 * t) * t)
end

function tmp = code(t)
    tmp = (4e-32 * t) * t;
end

code[t_] := N[(N[(4e-32 * t), $MachinePrecision] * t), $MachinePrecision]

\begin{array}{l}

\\

\left(4 \cdot 10^{-32} \cdot t\right) \cdot t

\end{array}

Initial program: 3.4%

Taylor expanded in t around 0

*-commutative: N/A

lower-*.f64: N/A

unpow2: N/A

lower-*.f64: 99.3%

Applied rewrites: 99.3%

Taylor expanded in t around 0

unpow2: N/A

associate-*r*: N/A

lower-*.f64: N/A

*-commutative: N/A

lower-*.f64: 99.3%

Applied rewrites: 99.3%

Final simplification: 99.3%
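To see what the two derivations buy in practice, the initial program and both alternatives can be compared against an exact rational evaluation. The following is a minimal sketch, not part of Herbie: the names `initial`, `alt_1`, `alt_2`, `exact` and `relative_error` are hypothetical, and the reference treats the literal 2e-16 as the exact rational 2/10^16.

```python
from fractions import Fraction


def initial(t):
    # Initial program, evaluated in binary64.
    t_1 = 1.0 + (t * 2e-16)
    return (t_1 * t_1) + (-1.0 - (2.0 * (t * 2e-16)))


def alt_1(t):
    # First alternative from the report.
    return ((t * 2e-16) * t) * 2e-16


def alt_2(t):
    # Second alternative from the report.
    return (4e-32 * t) * t


def exact(t):
    # Same expression as the initial program, in exact rational arithmetic.
    # Assumption: 2e-16 stands for the real number 2/10^16.
    e = Fraction(2, 10**16)
    t = Fraction(t)
    t_1 = 1 + t * e
    return t_1 * t_1 + (-1 - 2 * (t * e))


def relative_error(f, t):
    # Relative error of f(t) against the exact rational reference.
    ref = exact(t)
    return float(abs(Fraction(f(t)) - ref) / ref)


if __name__ == "__main__":
    for t in (0.9, 1.0, 1.1):
        print(t, relative_error(initial, t),
              relative_error(alt_1, t), relative_error(alt_2, t))
```

Relative error is used here only because it is easy to read; it is not the error measure Herbie reports.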

(FPCore (t) :precision binary64 (let* ((t_1 (+ 1.0 (* t 2e-16)))) (fma t_1 t_1 (- -1.0 (* 2.0 (* t 2e-16))))))

double code(double t) {

double t_1 = 1.0 + (t * 2e-16);

return fma(t_1, t_1, (-1.0 - (2.0 * (t * 2e-16))));

}

function code(t)
    t_1 = Float64(1.0 + Float64(t * 2e-16))
    return fma(t_1, t_1, Float64(-1.0 - Float64(2.0 * Float64(t * 2e-16))))
end

code[t_] := Block[{t$95$1 = N[(1.0 + N[(t * 2e-16), $MachinePrecision]), $MachinePrecision]}, N[(t$95$1 * t$95$1 + N[(-1.0 - N[(2.0 * N[(t * 2e-16), $MachinePrecision]), $MachinePrecision]), $MachinePrecision]), $MachinePrecision]]

\begin{array}{l}
\\
\begin{array}{l}
t_1 := 1 + t \cdot 2 \cdot 10^{-16}\\
\mathsf{fma}\left(t_1, t_1, -1 - 2 \cdot \left(t \cdot 2 \cdot 10^{-16}\right)\right)
\end{array}
\end{array}
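The fma form differs from the initial program only in that the product t_1 * t_1 is not rounded before the addition. A rough way to experiment with this in Python is to emulate the fused operation with exact rationals and a single final rounding. This is a sketch under that assumption; `fma_emulated`, `unfused` and `fused` are hypothetical helper names, and the emulation ignores overflow, infinities and NaN.

```python
from fractions import Fraction


def fma_emulated(a, b, c):
    # Compute a*b + c exactly, then round once to the nearest double.
    # Assumption: all inputs are finite doubles.
    return float(Fraction(a) * Fraction(b) + Fraction(c))


def unfused(t):
    # Initial program: the product is rounded before the addition.
    t_1 = 1.0 + (t * 2e-16)
    return (t_1 * t_1) + (-1.0 - (2.0 * (t * 2e-16)))


def fused(t):
    # fma variant: product and sum share a single rounding.
    t_1 = 1.0 + (t * 2e-16)
    return fma_emulated(t_1, t_1, -1.0 - (2.0 * (t * 2e-16)))


if __name__ == "__main__":
    print(unfused(1.0), fused(1.0))
```

At t = 1.0 the unfused form evaluates to 0.0, while the emulated fused form returns 2^-104, the exact residual of the already rounded operands. The fused form still inherits the rounding of t_1 and of the subtracted term, so it does not recover the real-valued result.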

herbie shell --seed 2024272

(FPCore (t)

:name "fma_test1"

:precision binary64

:pre (and (<= 0.9 t) (<= t 1.1))

:alt

(! :herbie-platform default (let ((x (+ 1 (* t 1/5000000000000000))) (z (- -1 (* 2 (* t 1/5000000000000000))))) (fma x x z)))

(+ (* (+ 1.0 (* t 2e-16)) (+ 1.0 (* t 2e-16))) (- -1.0 (* 2.0 (* t 2e-16)))))
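The accuracy percentages in this report can be approximated outside Herbie. The sketch below assumes that accuracy is 1 minus the bits of error divided by 64, where the bits of error are the base-2 logarithm of the number of doubles between the computed and the correctly rounded result; that definition is an assumption about Herbie's metric, and `ordinal`, `bits_of_error`, `accuracy_percent`, `initial` and `exact` are hypothetical names.

```python
import math
import struct
from fractions import Fraction


def ordinal(x):
    # Map a double to an integer so that adjacent doubles differ by 1.
    (bits,) = struct.unpack("<q", struct.pack("<d", x))
    return bits if bits >= 0 else -(bits & 0x7FFFFFFFFFFFFFFF)


def bits_of_error(approx, reference):
    # log2 of the count of doubles separating the two values (plus one).
    return math.log2(abs(ordinal(approx) - ordinal(reference)) + 1)


def accuracy_percent(approx, reference):
    # Assumed metric: share of the 64 bits that are not in error.
    return 100.0 * (1.0 - bits_of_error(approx, reference) / 64.0)


def initial(t):
    # Initial program, evaluated in binary64.
    t_1 = 1.0 + (t * 2e-16)
    return (t_1 * t_1) + (-1.0 - (2.0 * (t * 2e-16)))


def exact(t):
    # Exact rational evaluation, rounded once to the nearest double.
    # Assumption: 2e-16 stands for the real number 2/10^16.
    e = Fraction(2, 10**16)
    t = Fraction(t)
    t_1 = 1 + t * e
    return float(t_1 * t_1 + (-1 - 2 * (t * e)))


if __name__ == "__main__":
    for t in (0.9, 1.0, 1.1):
        print(t, accuracy_percent(initial(t), exact(t)))
```

Under these assumptions the initial program scores roughly 3.4% for inputs satisfying the precondition 0.9 <= t <= 1.1, in line with the figure reported for it above.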